For astrophotography of deep-space objects like galaxies and nebulae, I would say little is to be gained from a pixel scale finer than 2 arc-sec per pixel. Even at 2 arc-sec, other factors play a significant role in the useful resolution. How stable are your skies? What size aperture is your scope? How good is your mount over the integration period? How good is your alignment? The 2 arc-sec figure is a rule of thumb.

It is doubtful that the seeing around Syracuse is better than 2 arc-sec, and you can’t correct for it without adaptive optics. A 4″ aperture scope has, at best, about 1 arc-sec resolution. Camera lenses, even the best ones, generally do not achieve this diffraction limit. Pointing to 1 arc-sec accuracy over a 5 min integration time is also pretty difficult.
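As a rough check on the "about 1 arc-sec" figure for a 4″ aperture, here is a minimal sketch that evaluates two common resolution estimates, the Rayleigh criterion and Dawes' empirical limit. The 550 nm wavelength (green light) and the function names are my assumptions for illustration.

```python
import math

# Arc seconds in one radian (about 206265).
ARCSEC_PER_RADIAN = 180 / math.pi * 3600


def rayleigh_limit_arcsec(aperture_mm, wavelength_nm=550.0):
    """Rayleigh criterion, theta = 1.22 * lambda / D, in arc seconds."""
    wavelength_m = wavelength_nm * 1e-9
    aperture_m = aperture_mm * 1e-3
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RADIAN


def dawes_limit_arcsec(aperture_mm):
    """Dawes' empirical limit for double stars, 116 / D(mm), in arc seconds."""
    return 116.0 / aperture_mm


aperture_mm = 4 * 25.4  # a 4-inch aperture expressed in millimetres
rayleigh = rayleigh_limit_arcsec(aperture_mm)
dawes = dawes_limit_arcsec(aperture_mm)
print(f"Rayleigh: {rayleigh:.2f} arc-sec, Dawes: {dawes:.2f} arc-sec")
```

Both estimates land a little above 1 arc-sec, which is consistent with the "at best" wording above.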

Now what is lost by having a finer resolution? Best case, you simply waste processing time on a resolution that you will never see. When imaging, there is the light we want, from a deep-sky object, and the light we get but don’t want: light pollution, natural aurora, and sunlight scattering off dust between Earth and Mars. The image processing we do doesn’t add light to an image so much as it improves the contrast between the light we want and the light we don’t. The processing removes the average of the undesired light, leaving its unpredictable variations. The more time accumulated in a stack of images, the higher the ratio of the desired light to the variations of the undesired light. (I am getting to the point in a minute.)
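To put a number on how stacking helps, here is a small sketch assuming the only noise is photon (shot) noise, so the leftover variation is the square root of the total electrons collected. The per-frame values of 10 signal electrons and 400 background electrons are made up for illustration, as is the function name.

```python
import math


def stacked_snr(signal_per_frame_e, background_per_frame_e, n_frames):
    """Signal-to-noise ratio of one pixel after summing n_frames exposures.

    Assumes shot noise only: subtracting the average background leaves
    random variations equal to sqrt(total electrons collected).
    """
    total_signal = n_frames * signal_per_frame_e
    total_background = n_frames * background_per_frame_e
    noise = math.sqrt(total_signal + total_background)
    return total_signal / noise


for n in (1, 4, 16, 64):
    print(n, "frames -> SNR", round(stacked_snr(10, 400, n), 2))
```

Under these assumptions the ratio grows with the square root of the number of frames, so four times the integration time doubles it.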

If we image at a finer scale, what happens? Let’s compare two cases: one with 1 arc-sec pixels and one with 2 arc-sec pixels. Binning means combining adjacent pixels into one larger pixel, which increases the light collected per pixel, reduces resolution, and changes the size of the resulting image. After processing, bin four 1 arc-sec pixels so that they cover the same angular area as a single 2 arc-sec pixel. As far as the signal-to-noise ratio of the light we collect goes, there will be no difference. Each 1 arc-sec pixel gathers 1/4 of the light of the larger 2 arc-sec pixel, but when you add four 1 arc-sec pixels together, the light gathered is the same. Astronomy Tools has a CCD suitability calculator you can use to see how suitable different telescope and camera combinations and settings are.

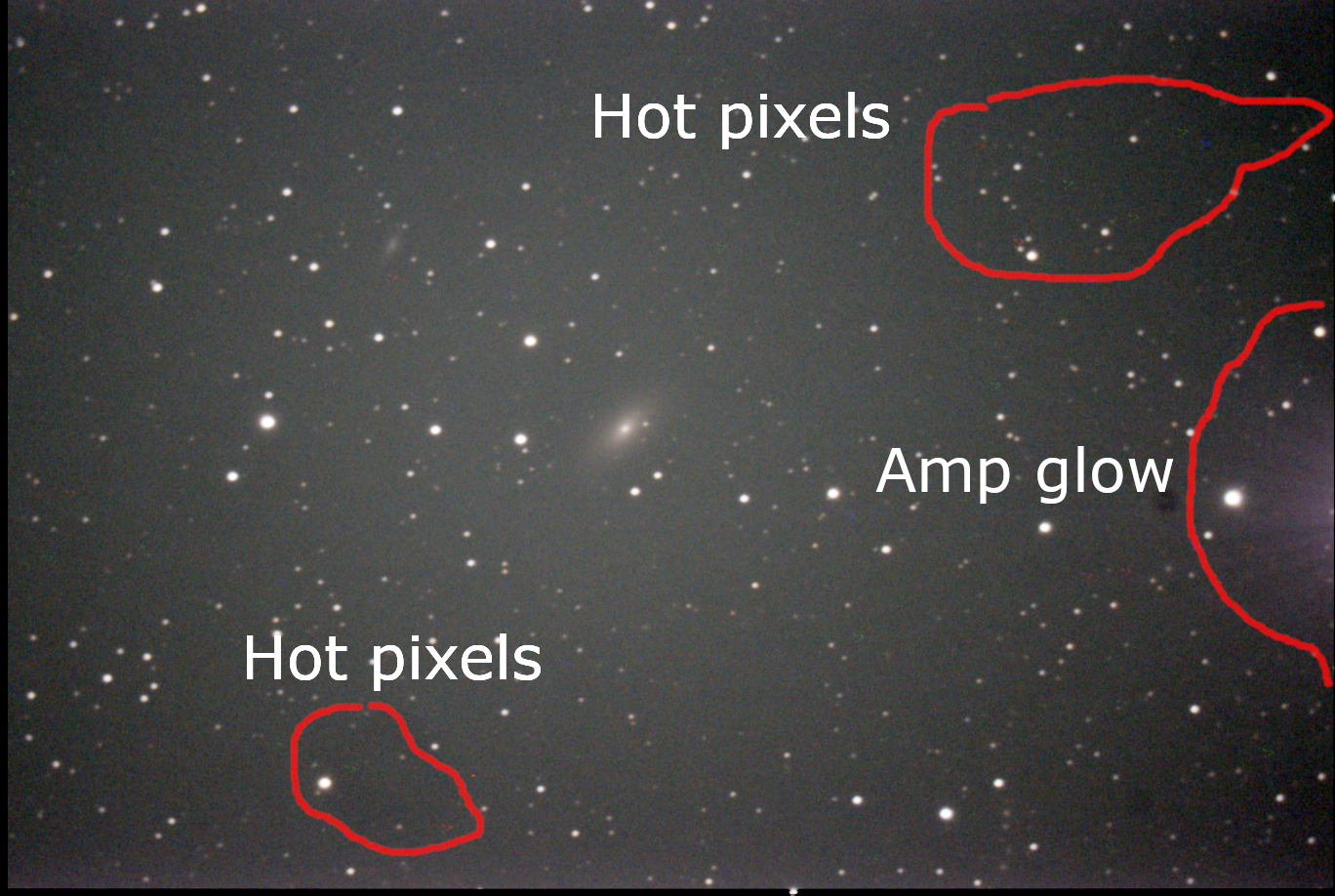
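The 2×2 binning described above can be sketched in a few lines. This is only an illustration on a tiny made-up image stored as nested lists; the function name `bin2x2` and the pixel values are hypothetical, and real processing software will have its own binning routine.

```python
def bin2x2(image):
    """Sum each 2x2 block of a 2D list into a single, larger pixel."""
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image dimensions must be even for 2x2 binning")
    return [
        [
            image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


# A 4x4 image at 1 arc-sec per pixel (electron counts are made up).
fine = [
    [25, 25, 10, 10],
    [25, 25, 10, 10],
    [5, 5, 40, 40],
    [5, 5, 40, 40],
]

binned = bin2x2(fine)  # now 2x2 at 2 arc-sec per pixel
print(binned)
```

The binned image has half the width and height, and the total light is unchanged: each output pixel holds the sum of the four input pixels it replaced.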

However, that is not the whole story. What about the electronic noise from the sensor? There are 2 kinds of noise. There is amplifier (read) noise from reading the charge on the pixel, and there is a leakage current (dark current) that fills the pixels over time. The leakage current can be reduced by cooling the sensor. It can also be mitigated by taking dark frames, which measure the leakage current so that it can be subtracted in image processing.

In the case above, the amplifier noise will be higher for the 1 arc-sec example because there are 4 amplifier reads for every combined pixel versus only 1 read for the 2 arc-sec pixel. So having more pixels to read can give a worse signal-to-noise ratio than having fewer pixels to read. With today’s read electronics, the typical read noise is under 5 electrons, and if you are integrating for 5 min, you are likely dominated by background (unwanted) light, so it shouldn’t make a difference. So for deep-space objects, in general, trying to get below 2 arc-sec per pixel yields little to no benefit.
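To see how small the read-noise penalty is when the background dominates, here is a hedged sketch comparing one read of a 2 arc-sec pixel with four reads of 1 arc-sec pixels covering the same area. It assumes independent read noise that adds in quadrature, ignores leakage current, and uses made-up electron counts (200 from the object, 5000 from the background) with the 5-electron read noise mentioned above.

```python
import math


def snr(signal_e, background_e, read_noise_e, n_reads):
    """SNR for the light falling on one 2 arc-sec patch of sky.

    signal_e and background_e are the total electrons collected over the
    patch; each read adds read_noise_e**2 to the variance.
    """
    variance = signal_e + background_e + n_reads * read_noise_e ** 2
    return signal_e / math.sqrt(variance)


signal_e = 200.0       # electrons from the object (illustrative)
background_e = 5000.0  # electrons from unwanted sky light (illustrative)
read_noise_e = 5.0     # electrons of noise per read

snr_one_read = snr(signal_e, background_e, read_noise_e, n_reads=1)
snr_four_reads = snr(signal_e, background_e, read_noise_e, n_reads=4)
print(f"one 2 arc-sec pixel:    SNR = {snr_one_read:.3f}")
print(f"four 1 arc-sec pixels:  SNR = {snr_four_reads:.3f}")
```

With these numbers the four-read case is worse by less than 1%. Rerunning with a much lower background, as in a short exposure under a dark sky, makes the gap more noticeable.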

Everything up to now is for deep-sky imaging. If you are imaging a planet like Jupiter, you would want 1/4 arc-sec resolution. Take movies (1/30 sec exposures or so), throw out the not-so-great frames, pray for great seeing every once in a while, and integrate the frames that remain.